CRO teams' dependency on devs: how to overcome the collaboration puzzle

Conversion Rate Optimization (CRO) teams are in charge of fine-tuning websites to push people closer to conversion. They're responsible for identifying and implementing changes that lead to more website visitors taking desired actions.

Drawing on user experience (UX), web analytics, psychology, and marketing skills, they run experiments, analyze results, and tweak pages to appeal to more customers. As a result, they wear many hats.

Conversion rate optimization is all about speed and results. Learn how Croct can offer you speed and independence from developers.

To understand the multidisciplinary approach CRO teams apply to improving a brand's conversion rate, let's look at each step of their process:

- Researching and getting data to understand the user's behavior

- Formulating a hypothesis in light of the data gathered

- Making a list of priorities to help concentrate their efforts on the most critical and severe issues

- Implementing and running tests based on that analysis, hypothesis, and prioritization to compare user experiences

- Reviewing test findings and creating more theories.

Next, we will discuss which skills have become table stakes for CRO professionals, then dive into the challenge these professionals face in depending on tech teams.

Keeping CRO skills up to date

The marketing industry grows side by side with technology. To stay relevant in the market, you need to keep acquiring new skills.

Data analysis and statistics skills are no longer cutting-edge. Frequent interaction with developers requires CRO professionals to understand not only key marketing terms, but also tech jargon. Many CRO professionals choose to bridge the gap between the two areas by learning basic programming concepts to gain more autonomy in testing and decrease their dependency on developers.

In short, the combination of marketing and technology skills is essential for a CRO professional to be able to communicate and create an increasingly agile optimization cycle.

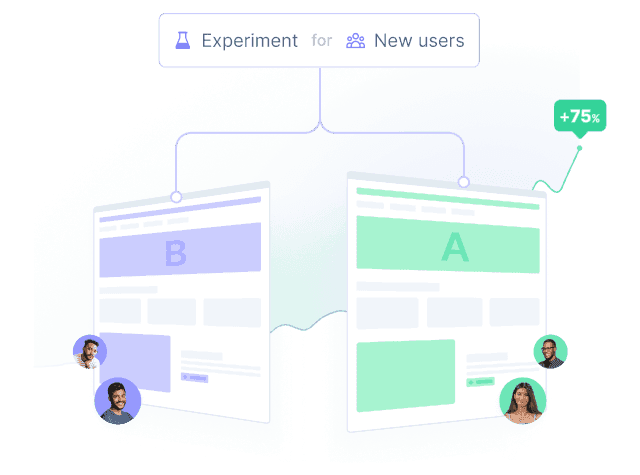

AB testing and the CRO scope

You probably already know that AB testing is an effective strategy for optimizing a website's conversion rate. However, CRO teams often rely on developers to run these tests. This is because AB testing requires changes to a website's code, which is outside the CRO scope.

As a CRO specialist or a marketer, it's essential that you know how to work with analytics platforms. You will need to analyze a lot of data to gain insights into user behavior. However, implementing every test directly in code shouldn't be your job. So, how do you deal with these demands when your extra skills start to be treated as the expected baseline?

It takes a lot of teamwork to define which tasks are essential and which tools can optimize the work without overloading stakeholders. You should also value giving and receiving assertive feedback to ensure that important tasks are carried out effectively.

Learning tech skills can increase your productivity as a CRO professional, since you depend less on developers to run tests. Still, this know-how should be rewarded as a differentiator, not treated as a baseline.

Companies are already listing programming knowledge as desirable in their CRO application forms. However, they need to make the benefits for professionals with these extra skills clearer. In addition, organizations that want to improve results should also take responsibility for offering courses and professional development to CRO specialists.

Developer dependency challenges

Most AB testing platforms today require developers to work on the initial code setup and implement the tests. They may need to create variations, write the code for tracking and recording data, and ensure the tests are implemented correctly on the website.

That overburdens developers, who prefer to work on larger projects rather than small, daily website changes. For example, here's a scenario where the developer has to change the code three times and perform three deployments during AB testing:

1. Implementing the initial code

Suppose an e-commerce team wants to test different versions of its checkout CTA to see which one converts more visitors into customers. The developer writes the code for the checkout CTA and ensures it works correctly. This version serves as the AB test's control.

2. Adding the AB test code

The developer then adds the code to implement the AB test and sets up two versions of the checkout CTA:

- Version A: identical to the control

- Version B: different color or copy.

Next, they add the code to randomly assign users to either Version A or Version B and to track specific metrics like conversion and cart abandonment rates.
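The assignment and tracking described in this step can be sketched roughly as follows. This is a minimal Python illustration, not the code of any specific testing platform: the `assign_variant` function, the `ConversionTracker` class, and the `checkout-cta` experiment key are all hypothetical names. It also swaps purely random assignment for deterministic hashing of the user ID, an assumption made here so that a returning visitor keeps seeing the same version.

```python
import hashlib
from collections import Counter


def assign_variant(user_id, experiment, variants=("A", "B")):
    """Map a user to a variant; the same user always gets the same one.

    Hashing the experiment key together with the user ID gives a roughly
    even split without having to store the assignment anywhere.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


class ConversionTracker:
    """Hypothetical in-memory tracker for visits and conversions per variant."""

    def __init__(self):
        self.visits = Counter()
        self.conversions = Counter()

    def record_visit(self, variant):
        self.visits[variant] += 1

    def record_conversion(self, variant):
        self.conversions[variant] += 1

    def conversion_rate(self, variant):
        visits = self.visits[variant]
        return self.conversions[variant] / visits if visits else 0.0


# Example: one visitor lands on the checkout page and completes the purchase.
tracker = ConversionTracker()
variant = assign_variant("user-123", "checkout-cta")
tracker.record_visit(variant)
tracker.record_conversion(variant)
```

In a real setup the tracker would write to an analytics backend rather than memory, and every change to this logic would go through the developer and a deployment, which is the dependency this scenario illustrates.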

3. Making changes based on the results

After running the AB test for a fixed period and collecting data on the performance of each version of the checkout CTA, you analyze the results. Suppose Version B performed better than Version A, with a higher conversion rate and lower cart abandonment rate. The developer would then make Version B the default checkout CTA.

In this scenario, the developer changes the code three times: once to implement the initial checkout CTA, once to set up the AB test, and once to make changes based on the test results. They potentially perform three deploys: one for the initial implementation, one for the test, and one to update the code for all users.

Limitations due to dependency

Depending on developers to keep coding and deploying multiple versions can delay your test launches. Developers are often busy with other tasks and may be unable to prioritize CRO-related work, especially if, as expected, you're consistently AB testing.

That leads to a slow optimization process, as you can only run one or two tests per month at best. And at a time when you need more agility in your CRO process, the numbers don't add up.

Remember that AB testing is an ongoing process, with each test expanding upon the last one. So, adding agility to your CRO process lets you improve your website's conversion rate faster.

You need speed, flexibility, and responsiveness to make quick adjustments, adopt different strategies, and promptly respond to customer behavior changes. The agility in your process goes a long way in helping you improve your existing optimization efforts.

How to gain optimization speed

Croct's AB testing engine provides a simpler way to run continuous AB tests without depending on developers for daily changes. With Croct, you can make your website slots dynamic so you only need a developer for the initial setup and not to deploy each AB test. Then, you can run unlimited tests and set a winning variant as the default content for all audiences.

How it works

Suppose you want to improve your e-commerce website's product page to increase conversions. To begin, you'll want to set up dynamic slots on the pages. Slots are elements of the website's page you can edit without changing the entire page or disrupting the user experience. Examples are the product image, product description, or the CTA button.

Next, you run an AB test on the dynamic slots and analyze the result. Suppose Variant B performs better than Variant A, with higher conversion rates and lower bounce rates. You then set Variant B as the default content for all audiences. That means all product page visitors will see Variant B by default, which is optimized for maximum conversions.
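To make the idea of a dynamic slot more concrete, here is a minimal Python sketch. It is not Croct's API or implementation: the `Slot` class, its `content_for` and `promote` methods, and the `render_cta` helper are hypothetical names, and the content lives in memory purely for illustration. The point it tries to show is that the page code is written once, while the content it renders can change without another deployment.

```python
from dataclasses import dataclass, field


@dataclass
class Slot:
    """A named piece of page content that can change without a code change."""

    default: dict
    variants: dict = field(default_factory=dict)  # variant name -> content

    def content_for(self, variant=None):
        # Fall back to the default when no test is running
        # or the variant name is unknown.
        return self.variants.get(variant, self.default)

    def promote(self, variant):
        """Make the winning variant the default content and end the test."""
        self.default = self.variants[variant]
        self.variants = {}


def render_cta(content):
    # The rendering code only knows the shape of the content, not its values.
    return f'<button style="background:{content["color"]}">{content["label"]}</button>'


# Illustrative content only: the labels and colors are made up.
cta_slot = Slot(
    default={"label": "Buy now", "color": "#0055ff"},
    variants={
        "A": {"label": "Buy now", "color": "#0055ff"},
        "B": {"label": "Get yours today", "color": "#00aa55"},
    },
)

html_during_test = render_cta(cta_slot.content_for("B"))
cta_slot.promote("B")  # Variant B becomes the default for all visitors
html_after_test = render_cta(cta_slot.content_for())
```

Under these assumptions, `render_cta` never changes: running a new test or promoting a winner only touches the slot's content.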

With Croct's engine, you can create AB tests within an experience. So, if you have more than one experience running, you can create concurrent tests. That means testing multiple pieces of content simultaneously, one for each audience.

While the experiments and tests are running, you can monitor your conversion rates with our dashboards to choose the most successful variants. And, as the website slots are dynamic, you can run unlimited tests and continually optimize your website for maximum conversions.

At Croct, we use the Bayesian approach to interpret your results and determine the winning variant. Our engine calculates the metrics in real time as more data gets uploaded to the system.

Before we declare the winner, we observe metrics like conversion rate, probability to be best (PBB), uplift, and more. Also, we make sure the metrics are stable enough to draw a reliable conclusion. You can get more details about how our AB testing engine works here.
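For readers curious about what a metric like probability to be best involves, the sketch below shows one common way to estimate it. It is an illustration under stated assumptions, not a description of Croct's engine: it assumes a Beta-Binomial model with a uniform Beta(1, 1) prior and estimates PBB by Monte Carlo sampling. The function names, the number of draws, and the example figures are all hypothetical.

```python
import random


def probability_to_be_best(conv_a, n_a, conv_b, n_b, draws=20000, seed=42):
    """Estimate the probability that variant B's true conversion rate beats A's.

    Each variant's rate gets a Beta(1 + conversions, 1 + non-conversions)
    posterior, which follows from a uniform Beta(1, 1) prior (an assumption).
    """
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    wins_b = 0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins_b += 1
    return wins_b / draws


def uplift(conv_a, n_a, conv_b, n_b):
    """Relative improvement of B's observed conversion rate over A's."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    return (rate_b - rate_a) / rate_a


# Made-up example: 1,000 visitors per variant, 100 vs. 150 conversions.
pbb = probability_to_be_best(100, 1000, 150, 1000)
relative_uplift = uplift(100, 1000, 150, 1000)
print(f"PBB for B: {pbb:.3f}, uplift: {relative_uplift:.1%}")
```

Because the posterior is recomputed from running totals, an estimate like this can be refreshed whenever new data arrives. A single high PBB reading early in a test can still be noise, which is one reason to wait for the metrics to stabilize before declaring a winner.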

Wrapping up

Since you can easily AB test your website's content using our platform, you can significantly reduce your developer dependency. That makes the CRO process more agile, so you gain lots of optimization speed, leading to faster work and scalability.

Want to know more about how we can help you scale your AB testing? Create your free account and explore our platform.